Human-machine Interaction Ethics

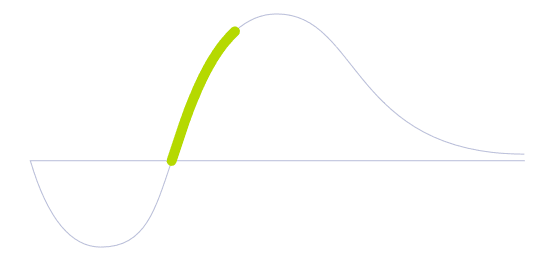

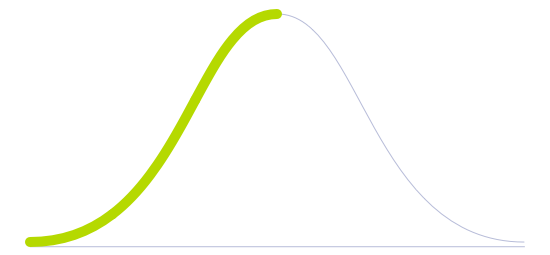

Technology Life Cycle

Marked by a rapid increase in technology adoption and market expansion. Innovations are refined, production costs decrease, and the technology gains widespread acceptance and use.

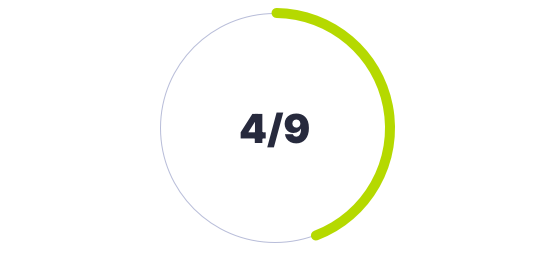

Technology Readiness Level (TRL)

Experimental analyses are no longer required as multiple component pieces are tested and validated together in a lab environment.

Technology Diffusion

Adopts technologies once they are proven by Early Adopters, preferring those that are well established and reliable.

This ethical framework would help determine the limits of AI programming and applications: which uses should be allowed, and which should be regulated or universally forbidden. Its overall goal is to ensure that technology is developed and used in a way that respects human dignity, autonomy, and privacy. It would also provide guidelines and best practices that help HMI designers and developers create ethical and responsible technology.

Topics already under consideration as AI becomes more integrated into human life include privacy, bias and fairness, safety, transparency, explainability, human-centric design, regulation, and governance. Because artificially intelligent beings may develop autonomously, ethical values such as responsibility, transparency, accountability, and incorruptibility should be expressed as algorithms and programmed into their systems so that they behave accordingly. Once artificial beings acquire a certain level of autonomy, this set of ethical configurations would help them understand how human society functions and could allow them to be integrated as an extension of humankind.

As humans interact with machines, the resulting metrics would offer insights that refine how machines are introduced into society. For instance, children could learn at school, from a young age, how to behave toward and treat robots. Adults and late adopters, on the other hand, could be taught through dedicated training courses and educational material. By analyzing these outputs, humans would be expected to develop affinities with robots and build a more symbiotic relationship between the two. However, another matter to keep in mind is the possible future emergence of a hierarchy between artificial and organic beings.

Future Perspectives

Many ethical debates are likely to surface once machines acquire their own consciousness or robots become sentient. Questions should be raised, such as to what extent humans should treat artificially intelligent agents merely as tools, or whether machines could gain sufficient power or rights. Faced with this quandary, an ideal ethical standard may emerge only after years of human-machine interaction.

Image generated by Envisioning using Midjourney