Using AI and the Centaur Mindset to Assess Emerging Technology Impact

Artificial intelligence is here to stay, and with it come new kinds of human-machine augmentation, an approach we call the Centaur Mindset. At Envisioning, we have been working on a platform for creating and hosting AI-enhanced workshops. With this co-pilot tool, our goal is to augment workshop intelligence and push the limits of creativity. Recently, we had the opportunity to test the platform with our partner GIZ, Germany's main sustainable development agency, with whom we launched the GIZ techDetector platform.

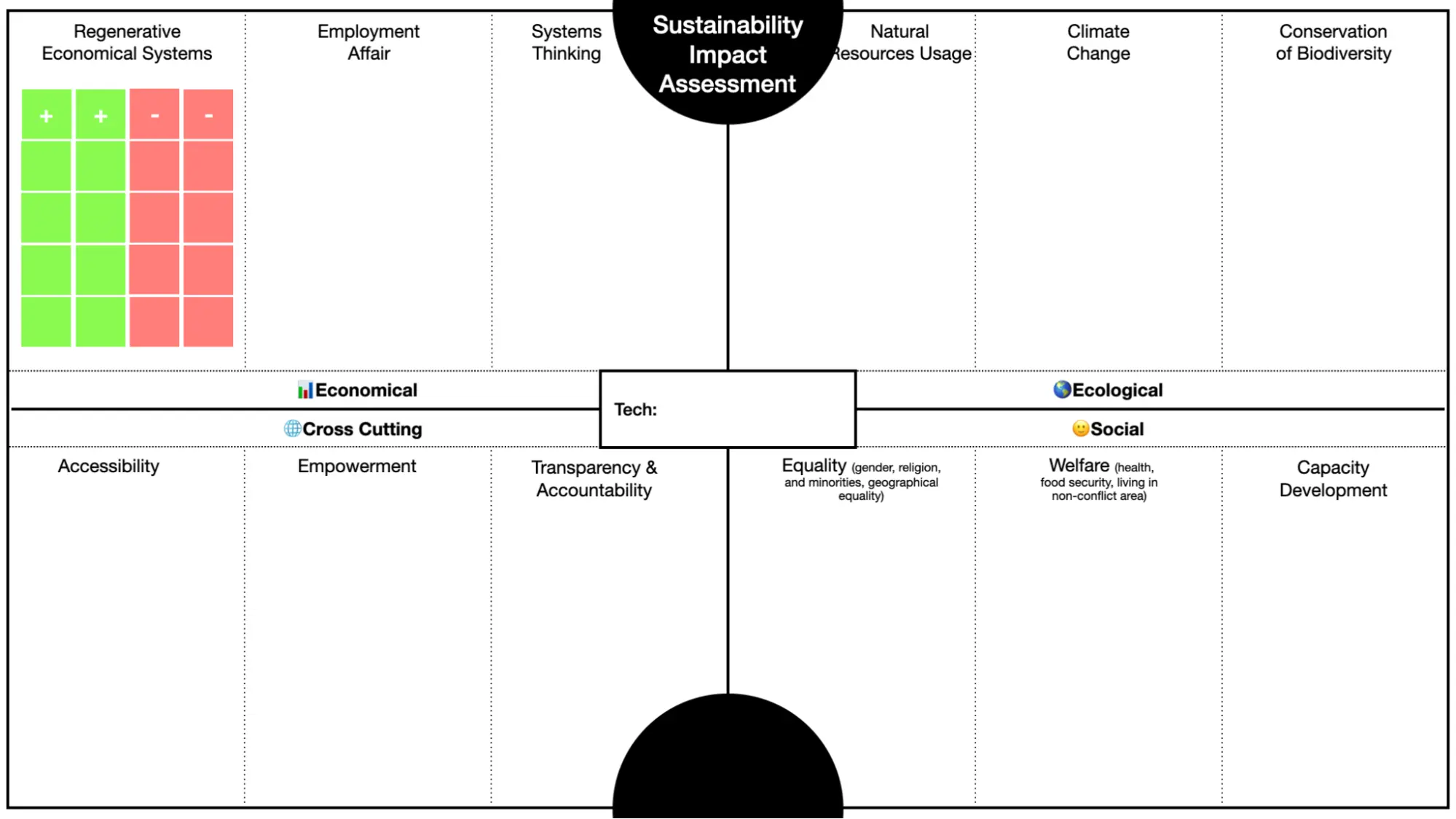

For this pilot, we were tasked with creating an AI-powered workshop for experts to assess the sustainable impact of emerging technologies for the techDetector. GIZ and Envisioning had already crafted internal assessment questions for different impact dimensions: Economic, Ecological, Social and Cross-Cutting (aspects dealing with accessibility, accountability and transparency). Our assignment was to translate this framework into a new format using our workshop-friendly interface powered by ChatGPT.

We started with some questions:

- Could AI help with this process, and how? Or would it make it more complicated?

- How is the process different when using this AI co-pilot?

- Which tasks in this process can AI be useful for?

Here are some highlights from plenty of collaborative work, trial and error, and a session full of learning:

Measure Twice, Cut Once

The preparation phase differed slightly from that of a conventional workshop, because we had to look after the (human) participants and also calibrate our AI platform for this specific project. We had to design engaging prompt-based dynamics for the team while keeping a common thread running through the whole experiment.

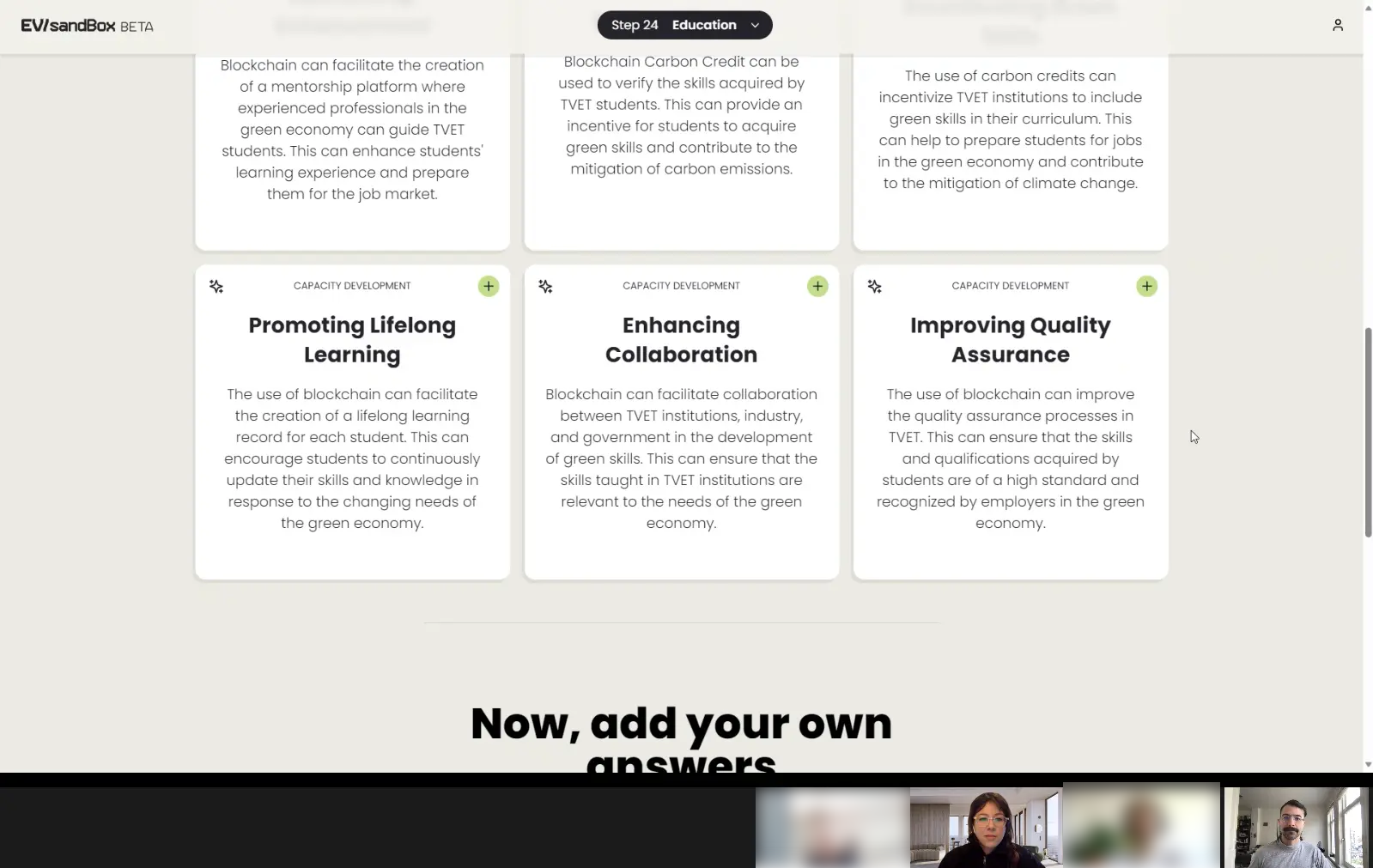

What started as an assessment along four impact dimensions, each with three subcategories, became a 24-step workshop (four dimensions × three subcategories × two perspectives) that alternated between positive and negative impacts for each subcategory. This led to a focused analysis at each step: how could this technology improve this aspect? And how could it harm the same aspect? This repetition encourages a critical view of emerging technologies, moving beyond the dystopian/utopian duality so often ingrained in futures thinking.
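The arithmetic behind the 24 steps can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the platform's actual code. The four dimension names come from the framework. The subcategory labels are hypothetical placeholders, because the real subcategory names are not listed here. The question wording paraphrases the two questions above.

```python
# Impact dimensions named in the GIZ/Envisioning assessment framework.
DIMENSIONS = ["Economic", "Ecological", "Social", "Cross-Cutting"]

# Assumption: three subcategories per dimension, labelled with hypothetical placeholders.
SUBCATEGORIES_PER_DIMENSION = 3

# Each subcategory is assessed twice: first for benefits, then for harms.
POLARITIES = [
    ("positive", "How could {tech} improve {aspect}?"),
    ("negative", "How could {tech} harm {aspect}?"),
]


def build_agenda(tech: str) -> list[dict]:
    """Return numbered workshop steps, alternating positive and negative questions per subcategory."""
    steps = []
    for dimension in DIMENSIONS:
        for i in range(1, SUBCATEGORIES_PER_DIMENSION + 1):
            aspect = f"{dimension} subcategory {i}"  # hypothetical placeholder label
            for polarity, template in POLARITIES:
                steps.append({
                    "step": len(steps) + 1,
                    "dimension": dimension,
                    "aspect": aspect,
                    "polarity": polarity,
                    "question": template.format(tech=tech, aspect=aspect),
                })
    return steps


if __name__ == "__main__":
    agenda = build_agenda("a hypothetical technology")
    print(len(agenda))  # 4 dimensions x 3 subcategories x 2 polarities = 24
    for step in agenda[:2]:
        print(step["step"], step["question"])
```

Generating the steps from the dimensions, rather than writing them out by hand, makes it easy to reuse the same structure for another technology or a revised framework.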

For our co-pilot to run smoothly, a great deal of prompt engineering and fine-tuning went into the preparation process. Choosing the right words, asking clearer questions, disambiguating terms, tweaking prompts to work around AI bias, and using negative prompts all brought us richer answers in each round of the assessment. For instance, when asked about regenerative economic systems, ChatGPT tended to return results about ecological aspects. Similarly, prompts about “education” tended to generate results focused on children's education, when we also wanted to see the impact on training and higher education. By testing and polishing our prompts, we were able to minimize these biases. This initial time investment in preparation also pays off, especially since the format can be reused with other teams, technologies, and assessments.
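One way to apply this kind of disambiguation consistently is to append scope notes, phrased as negative instructions, whenever an ambiguous term appears in a prompt. The following is a hypothetical sketch rather than the platform's implementation. `SCOPE_NOTES`, `build_prompt`, and the wording of the notes are assumptions. Only the two problem terms come from our tests. The resulting string would then be sent to ChatGPT through whatever client the platform uses, which is not shown here.

```python
# Hypothetical scope notes: negative instructions that steer the model away
# from the narrow readings we observed for these two terms.
SCOPE_NOTES = {
    "education": "Do not limit answers to children's schooling; "
                 "also cover professional training and higher education.",
    "regenerative economic systems": "Do not drift into purely ecological aspects; "
                                     "focus on the economic effects.",
}


def build_prompt(tech: str, aspect: str, polarity: str) -> str:
    """Build one assessment prompt, adding scope notes for any ambiguous terms in the aspect."""
    verb = "improve" if polarity == "positive" else "harm"
    lines = [f"How could {tech} {verb} {aspect}?"]
    for term, note in SCOPE_NOTES.items():
        if term in aspect.lower():
            lines.append(f"Scope for '{term}': {note}")
    lines.append("List concrete impacts, one per line.")
    return "\n".join(lines)


if __name__ == "__main__":
    print(build_prompt("a hypothetical technology", "Education", "positive"))
```

Keeping the notes in one table means each fix discovered during testing applies to every later round, rather than having to be remembered and retyped by a facilitator.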

Centaur in Action

The participants' role in this AI-enhanced dynamic differed from a traditional assessment, where they would rely solely on their own expertise, starting from a blank canvas. Because the AI proposed multiple possible impacts within seconds, experts could spend their knowledge and time validating those options and building on them. All of the surveyed experts considered the platform useful for technology impact assessment: half of them said it enhanced their creativity, while the other half said it helped with some ideas. Some experts also reported that the tool generated good ideas very quickly but produced broad, general scenarios that lacked depth. With large language models like ChatGPT, this kind of outcome is expected, which is why the experts' role is essential.

At times, the same participants were impressed by connections they hadn’t thought of; at others, sometimes within the same round, they were giggling at far-fetched answers. Blind spots and hallucinations had to pass through the sieve of the experts in the loop. This shows that AI could not do the work on its own, since it still needs human, professional input. At the same time, it optimized human effort and enhanced expert knowledge by broadening possibilities and guiding conversations.

Efficiency Shift

When we started working on this project, we expected the tool to speed up the assessment process. The original idea was for the whole team to make the entire journey together, but we quickly realized that analyzing even one technology and its impact this way would become an odyssey. So we divided the team into four groups, each responsible for a different impact dimension and reporting back to the others. The participants rated the platform as very intuitive and time-saving: plenty of scenarios were generated in seconds. However, the time saved was reinvested in richer, deeper, and more focused discussions about sustainable impact, and the entire team felt the two-hour workshop was not long enough. The same process can now also be replicated in further workshops with different technologies and different sets of experts, refining the process and the tool with each round.

Over-reliance Paradox

If you have ever reached for a calculator to do basic math, you know this feeling. Starting from AI-generated answers is convenient and quick, but letting the AI do all the work can also become a bad habit. Our way of dealing with this “crutch effect” would be to add different workshop dynamics, such as making participant-generated answers mandatory, starting with human input, or using the AI's responses as conversation starters that could lead the group into new areas. This is why a multiplayer, facilitated dynamic suits this format well: it helps strike a balance within this paradox.

Experiment and Learn

As AI and its applications evolve by the day, so do the possibilities and lessons learned from tools like these. The bottom line is that workshops and sprints will not be the same once AI permeates the process, and our goal is to make the most of human-machine potential in these collaborative dynamics. If you have ideas for other experiments with Envisioning, e-mail us so we can talk about building your own bespoke AI-powered workshop.