It's a fact that knowledge work has been going through some shake-ups in the past year, to say the least, with AI's quick adoption and evolution. As an emerging technology platform working for different industries, we are mainly used to knowing about emerging technologies rather than getting our hands dirty. This time it's different. After several months of studying and experimenting, we are reshaping our research process to incorporate AI, and we would like to share this work in progress with you. It's essential to start with some caveats.

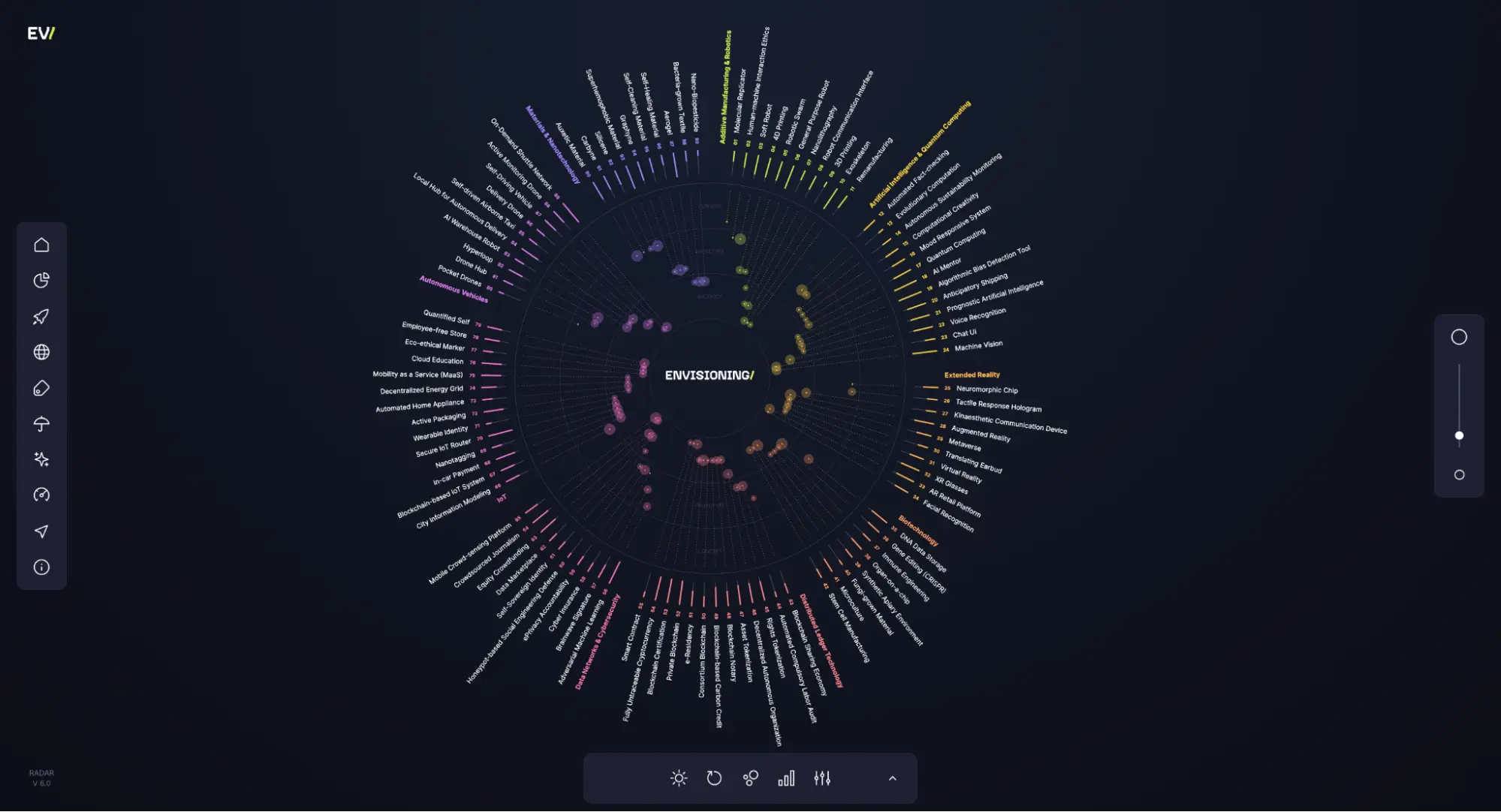

- Envisioning has built a tested methodology and a database over the past ten years, which gives us a solid base to work on and build upon.

- With AI's fast-paced updates, we realise this has become a liquid process, and we adapt and update it as new projects, discoveries, and updates come in. Maybe in a year, it will be quite different from what it looks like now (or it won't).

- We don't envision these tools substituting our researchers but enhancing, optimising and supporting our work.

- We are not experts in any specific technologies, and our approach is closer to the generalist side of the spectrum, making LLMs the perfect assistant for the task.

Now, let's get to it.

Diverging: Tech Scouting

When we first get a brief or idea for a project, we are usually talking about emerging technologies and the future of an industry or area. The first thing we do is comb through our database for technologies that could be important for the client. This allows us to get a mix of cross-cutting and specific entries, which we later validate at the granularity level and TRL (Technology Readiness Level) we are aiming for.

In this phase, we have found AI engines to be quite handy in helping us cover any blind spots: are techs missing in our selection or even in our database? Are there any fields we must include? GPT4 can be helpful with that, and we've also used Globe Explorer and Perplexity AI. Due to these innovations, we are able to save time and do more than one round of tech scouting and validation.

Converging: Crafting Content

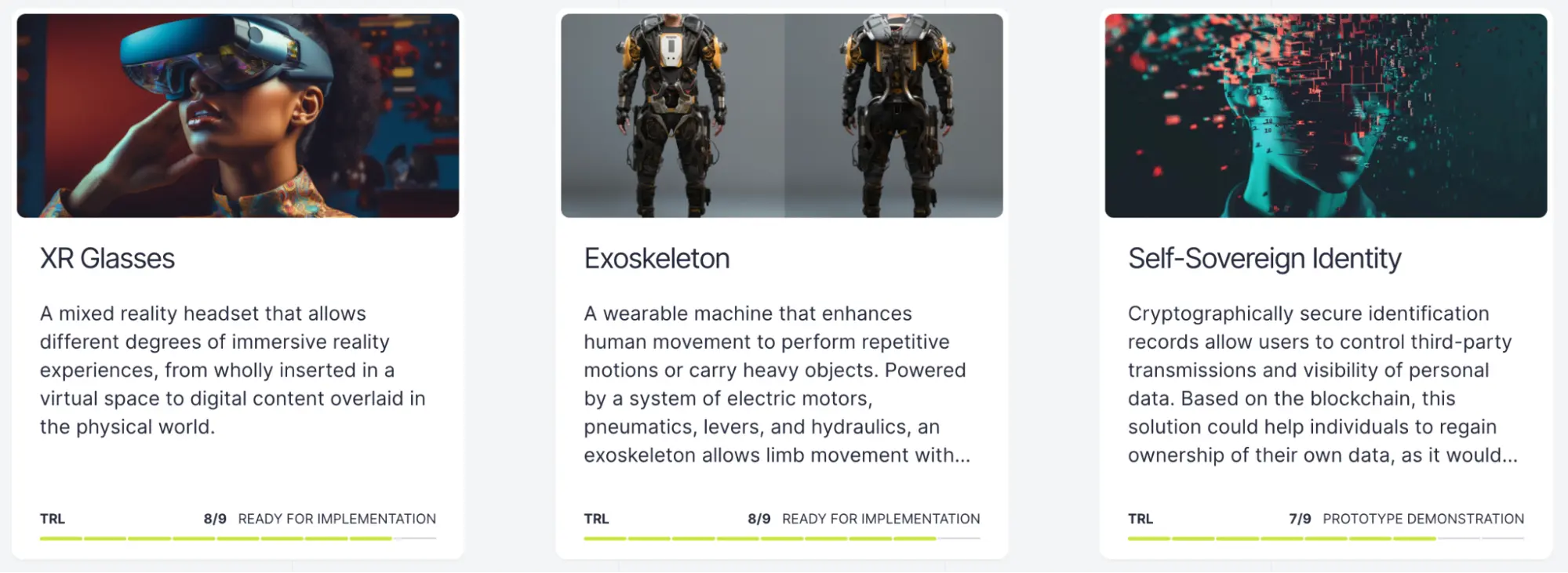

After we have fine-tuned the entry selection with our client, it's time to develop our content. This includes, for each technology: title, summary, description, use cases/applications, SDGs, TRL and other sections we see fit. Our latest methodology aims to follow a more straightforward approach in our descriptions, following a particular structure: What is this technology? How does it work? What problems does it solve? How does it impact the future of this industry? With a great deal of prompt engineering, we have transformed our methodology into a series of prompts, which play on the linguistic strengths of GPT4. On the one hand, it allows us to follow a pattern in our texts and keep them objective, while on the other, it gives us the flexibility to add different questions to the mix and adapt each technology description depending on the industry and project we are focusing on.

We have created a master prompt, which we improve over time, where we ask the questions mentioned before (or other questions), assign a role and determine the style in which the content should be written. We also have a master script page that allows us to deepen the conversation and add more complexity: Which SDGs could this technology help achieve and how? What are some current applications of this technology in industries X, Y and Z? Which organisations are working on its development? What could be some future applications? What are some unforeseen implications this technology could cause? We can even go off-script and ask more specific questions depending on the answers.

This effort allows us to follow a structure and a style within a project and optimise research time while offering flexibility in our research. These changes allow our research team to tap into a kind of Centaur Intelligence, meaning the role of the researcher has shifted in this human-machine interaction. Now, the researcher saves time crafting the content but also takes the role of editor and fact-checker (bias and hallucinations still happen, and it's fun to test how far it stretches.)

The editing phase is about filtering obvious statements and adding more up-to-date content or words that GPT might have missed, which might have come up when reading other materials found through our regular research: scientific articles, media content, reports, relevant explainers and other sources. It is also about making sure the material as a whole is not repetitive (apparently, GPT4 has some favourite adjectives, for instance).Grammarly has been a great assistant in finding synonyms, diversifying sentence structure, maintaining a style and inclusive language and avoiding typos and grammatical errors.

As for the fact-checking part, the process might differ depending on how ready the technology is and how familiar we are with it (some entries in our database have been updated for years). In that case, the process is more efficient, and we use the good old search to assess its current status, with sources and signals, to validate the generated information, assign a TRL and add more interesting cases to the entry. Here, we also have used GPT4 to summarise scientific papers and make their discoveries quickly accessible, which is especially useful for technologies with lower TRL or the ones that are still not buzzing in the media.

Imagining: Futures Aesthetics

One of the most fun (and important) parts of the process is creating images to illustrate our entries, and text-to-image AI has been of great help. It's an essential part of the process because it plays with the curiosity of our clients and readers and helps them envision different futures.

A couple of years ago, we had to rely on stock imagery, and while it was great for some things, it needed more diversity and uniform aesthetics. Using Midjourney allows us to follow a particular aesthetic in our projects, creating images with more diversity of characters and a mix of different views of the future. We do that by having a master prompt describing the style we want to follow and modifying it when we need to. For instance, we can move beyond that metallic techno-centric view of the future and play with varying aesthetics like solarpunk and cyberpunk.

Text-to-image AI also gives us the flexibility to efficiently personalise images to the industry of the project we are working on. It also allows us to play with more conceptual technologies, bringing them to life in an image. More important than that is bringing more nature and characters of all races, genders and ages to these futures visions.

We have noticed some partners are apprehensive about using AI-generated images in their projects and still prefer stock images, which is fine in some cases. We've also had some reasonable requests coming from clients, which you might consider when implementing it: avoid logos, avoid recognisable faces, avoid adding protected IP images to the engine, use the business account for more quality and IP protection, and use Stealth Mode.

For all of this process, we found it helpful to create our own methodology guidelines on a central wiki-style hub. At the start of every project, we duplicate the prompt and script page and make the necessary updates so we have a bank with all of our prompts and learnings over time. It's also handy to have this as part of the onboarding for new researchers so we can follow a determined framework and replicate our process.

Thank you for reading, and if you have found this a helpful process, please share it. If you're interested in collaborating with us, please reach out.